Drafting an AI Risk Management Policy – A Beginner’s Guide for (Re)Insurers

Gary is a Principal Consultant within our insurance practice in Dublin. He has 16 years of experience within the life and non-life (re)insurance sectors covering industry, audit and consultancy roles. His expertise covers financial reporting, prudential and conduct risk management, and assurance activities. Gary has provided outsourced actuarial, risk, compliance, and internal audit function services for a wide range of insurers, reinsurers and captives.

Introduction

As artificial intelligence reshapes the insurance landscape, Boards and control functions grow increasingly cautious about the risks posed by rapidly evolving technologies. At the same time, innovators grow frustrated as outdated governance frameworks stifle progress. Striking a balance between innovation and oversight begins with the fundamentals: establishing a well-structured AI Risk Management Policy. But where should insurers start? This article offers a practical guide to developing an AI Risk Management Policy while also exploring key insights from EIOPA’s recent Opinion on Artificial Intelligence and Risk Management.

Scope & Definitions

AI models are inherently complex, and unclear terminology only adds to the challenge. A strong AI Risk Management Policy should establish clear, company-wide definitions of key terms to prevent misunderstandings and misapplications.

Beyond definitions, the Policy should outline which AI systems fall within its scope. This may include underwriting and reserving models, claims automation tools, customer chatbots, and other AI-driven processes.

Importantly, the Policy must specify whether it applies solely to in-house AI or also covers third-party AI solutions integrated into the insurer’s operations.

Relationship with the Holistic Risk Management Framework

While a standalone AI Risk Management Policy may be necessary for many insurers, it should not exist in isolation from the broader Enterprise Risk Management Framework.

Insurers should consider whether the AI Risk Management Policy functions as a subset of the overarching Risk Management Policy and how it integrates with existing governance structures. Crucially, its development must align with the firm’s Risk Appetite and internal controls framework.

Policy Governance

Like any governance document, the AI Risk Management Policy should clearly define how often it is updated, who is responsible for maintaining it, and whether approval rests with the Board or a designated subcommittee, such as the Risk Committee.

While an annual review is standard practice for most policies, the rapid evolution of AI and insurance use cases may necessitate more frequent updates.

Roles & Responsibilities

The Board holds ultimate accountability for AI strategy, AI risk management, and oversight. The AI Risk Management Policy should clearly define any responsibilities delegated to its subcommittees.

For larger and more sophisticated insurers, appointing a Chief AI Officer may become standard practice. Where such a role exists, the Policy should clearly set out the extent of the risk management responsibilities attached to it. In some cases, firms may establish a dedicated AI Committee based on the nature, scale, and complexity of their AI-driven operations.

The policy should also recognise the responsibilities of key role holders in AI risk management:

- Data Protection Officers – Ensure AI systems processing personal data comply with data protection regulations and codes of conduct.

- Compliance and Audit Function Heads – Oversee regulatory compliance for AI systems across the organisation.

- Head of Actuarial Function – Assess the quality of data and systems used in calculating technical provisions and underwriting decisions.

- Chief Risk Officer (CRO) – Differentiate between first- and second-line AI risk management responsibilities, ensuring appropriate oversight structures are in place.

Maintaining an Inventory of AI Systems

Insurers should maintain a comprehensive inventory of all AI systems in use. At a minimum, the inventory should classify AI systems by level of risk in keeping with Articles 5 and 6 of the AI Act:

- Unacceptable Risk

- High-Risk

- Limited Risk

- Minimal Risk

While the inventory itself does not need to be included within the AI Risk Management Policy, the Policy should clearly define:

- Who is responsible for updating the inventory;

- How updates are made; and

- The frequency of updates.

Although multiple stakeholders may contribute to maintaining the inventory, best practice is to assign ownership to a designated individual to ensure consistency and accountability.
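To make the inventory requirements concrete, the following is a minimal sketch of how such a register could be structured. It is an illustration only: the class names (`RiskTier`, `AISystemRecord`, `AIInventory`), the fields, the owner title and the 90-day review interval are all assumptions rather than anything prescribed by the AI Act or EIOPA.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import List


class RiskTier(Enum):
    # Tier labels mirror the four levels listed in this article.
    UNACCEPTABLE = "Unacceptable Risk"
    HIGH = "High-Risk"
    LIMITED = "Limited Risk"
    MINIMAL = "Minimal Risk"


@dataclass
class AISystemRecord:
    name: str
    business_use: str
    risk_tier: RiskTier
    third_party: bool      # True if sourced externally rather than built in-house
    last_reviewed: date


@dataclass
class AIInventory:
    owner: str                   # single accountable individual (hypothetical title)
    review_interval_days: int    # update frequency defined by the Policy
    records: List[AISystemRecord] = field(default_factory=list)

    def add(self, record: AISystemRecord) -> None:
        self.records.append(record)

    def by_tier(self, tier: RiskTier) -> List[AISystemRecord]:
        return [r for r in self.records if r.risk_tier is tier]

    def overdue(self, today: date) -> List[AISystemRecord]:
        # Records whose last review is older than the Policy's review interval.
        return [r for r in self.records
                if (today - r.last_reviewed).days > self.review_interval_days]


# Hypothetical example entries.
inventory = AIInventory(owner="Head of Model Risk", review_interval_days=90)
inventory.add(AISystemRecord("life-underwriting-nn", "Life underwriting",
                             RiskTier.HIGH, False, date(2025, 1, 10)))
inventory.add(AISystemRecord("email-spam-filter", "Email filtering",
                             RiskTier.MINIMAL, True, date(2025, 5, 1)))

high_risk = [r.name for r in inventory.by_tier(RiskTier.HIGH)]
overdue = [r.name for r in inventory.overdue(date(2025, 6, 1))]
```

Keeping the owner and review interval on the inventory itself, rather than in a separate document, is one way to make the three items the Policy must define visible wherever the register is used.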

Regulatory Compliance Strategy

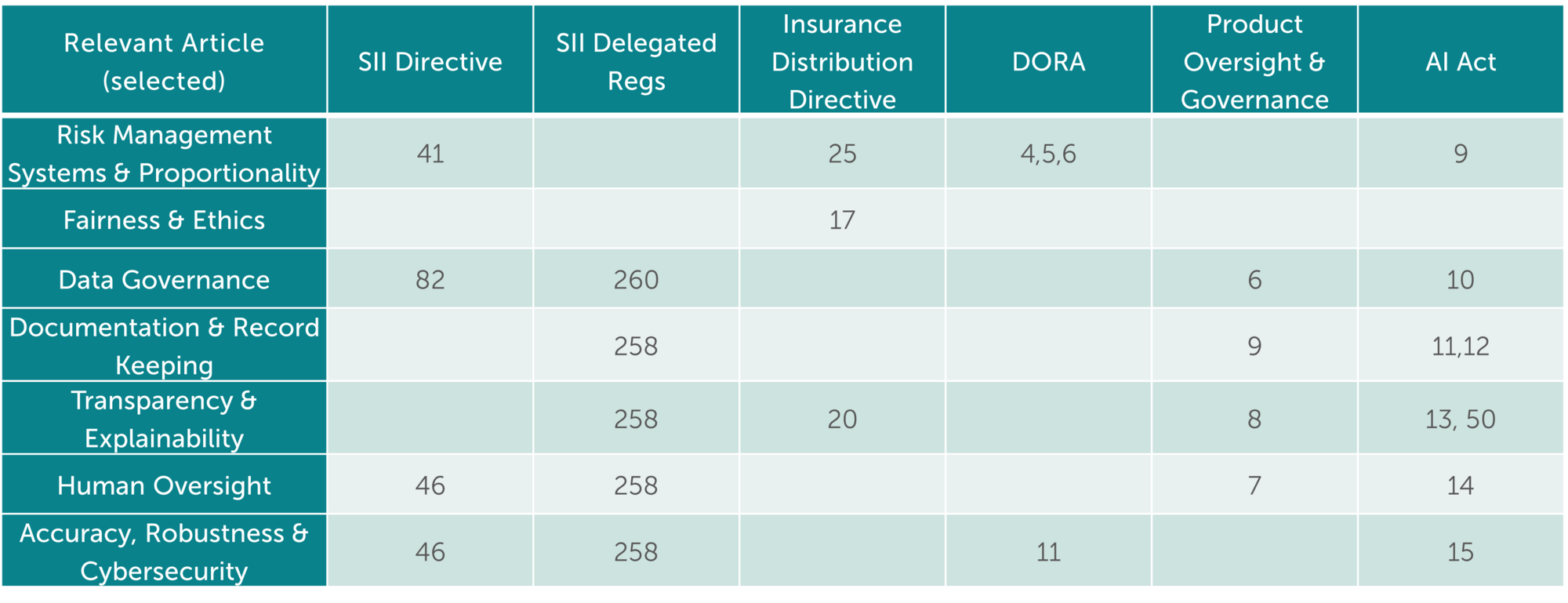

The AI Act has introduced significant obligations for providers and deployers of High-Risk AI systems. However, EIOPA’s recent Opinion on Artificial Intelligence and Risk Management has highlighted that many existing laws and regulations already apply to AI systems used in insurance. Some of these regulations will require new interpretations as AI adoption increases.

An AI Risk Management Policy must account for the full spectrum of relevant laws, regulations, and governance requirements. The accompanying table highlights key regulatory articles from various EU regulations that insurers should assess.

This list is not exhaustive; insurers must also consider regulations such as GDPR, local consumer protection codes, and guidance from national regulatory authorities. Additionally, some insurers must align with group-level governance requirements, which should be explicitly addressed in the AI Risk Management Policy.

The following sections explore the topics introduced above in further detail.

Risk Management Systems & Proportionality

AI risk management systems should be proportionate to the nature, scale, and complexity of the AI system in question. For example, a black-box neural network used to underwrite life insurance policies at scale poses significant risks to both customers and the insurer’s financial stability. In contrast, an AI-powered email spam filter carries minimal risk and is unlikely to cause widespread adverse outcomes.

Proportional risk management is already an established principle in insurance regulation. The Solvency II Directive (Article 41), Insurance Distribution Directive (Article 25), and DORA (Articles 4, 5, and 6) all emphasise the need for governance systems that reflect the scale and complexity of the risks they manage.

Once an AI system inventory is created, insurers will likely identify multiple AI systems with varying levels of risk. In practice, a case-by-case proportionality assessment may be required. The AI Risk Management Policy should establish a structured framework to evaluate the risk level of each AI system and provide guidelines on appropriate risk management measures.

The risk assessment framework may consider factors such as:

- Volume and sensitivity of the data processed;

- Autonomy of the system;

- Potential for discrimination or adverse consumer impacts;

- Ability to disrupt critical business functions;

- Financial impact on the insurer;

- Explainability of the system’s outcomes; and

- Degree of reliance on the system—whether it directly informs decisions or serves as a secondary validation tool.
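The factors above could be combined into a simple scoring aid. The sketch below assumes each factor is rated from 1 (low) to 5 (high) and that the Policy assigns weights and thresholds; the factor keys, weights, and the "light / standard / enhanced" labels are purely hypothetical, and a score of this kind would inform rather than replace a case-by-case judgement.

```python
# Hypothetical weights per factor; they sum to 1.0.
FACTOR_WEIGHTS = {
    "data_sensitivity": 0.20,     # volume and sensitivity of data processed
    "autonomy": 0.15,             # autonomy of the system
    "consumer_impact": 0.20,      # potential for discrimination or adverse impact
    "business_disruption": 0.15,  # ability to disrupt critical functions
    "financial_impact": 0.15,     # financial impact on the insurer
    "opacity": 0.10,              # 5 = least explainable
    "reliance": 0.05,             # 5 = directly drives decisions
}


def proportionality_score(ratings):
    """Weighted average of 1-5 ratings across all factors."""
    missing = set(FACTOR_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    for factor in FACTOR_WEIGHTS:
        if not 1 <= ratings[factor] <= 5:
            raise ValueError(f"rating for {factor} must be between 1 and 5")
    return sum(FACTOR_WEIGHTS[f] * ratings[f] for f in FACTOR_WEIGHTS)


def control_level(score):
    """Map a score to a level of risk management measures (assumed cut-offs)."""
    if score >= 3.5:
        return "enhanced"
    if score >= 2.0:
        return "standard"
    return "light"


# Illustrative ratings for the two examples used in this section.
underwriting_nn = {"data_sensitivity": 5, "autonomy": 4, "consumer_impact": 5,
                   "business_disruption": 3, "financial_impact": 5,
                   "opacity": 5, "reliance": 5}
spam_filter = {"data_sensitivity": 2, "autonomy": 1, "consumer_impact": 1,
               "business_disruption": 1, "financial_impact": 1,
               "opacity": 1, "reliance": 1}

underwriting_level = control_level(proportionality_score(underwriting_nn))
spam_level = control_level(proportionality_score(spam_filter))
```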

Article 9 of the AI Act outlines risk management requirements for High-Risk AI systems. While these requirements are mandatory for high-risk AI, insurers may choose to apply certain elements of this framework to other AI systems as well. Notably, Article 9 requires risk management processes to operate across the full lifecycle of AI models, ensuring continuous oversight from development to deployment and beyond.

Fairness & Ethics

Article 17 of the Insurance Distribution Directive (IDD) mandates that insurance distributors must always act honestly, fairly, and professionally in the best interests of their customers.

To uphold these principles, the AI Risk Management Policy should include:

- Measures to prevent algorithmic bias and discrimination;

- Fairness monitoring strategies, including defined fairness metrics;

- Commitment to diversity in AI model training datasets, along with protocols to ensure data is complete and free from bias; and

- Minimum standards for explainability of AI model outputs.
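As one illustration of a defined fairness metric, the sketch below computes the gap in favourable-outcome rates between groups (often called a demographic parity gap). The function names, the group labels and the sample data are assumptions; which metric is appropriate, and what gap is tolerable, is a decision for the insurer's Policy.

```python
from collections import defaultdict


def favourable_rates(decisions, groups):
    """Share of favourable decisions (1 = favourable, 0 = not) per group."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must have the same length")
    if not decisions:
        raise ValueError("at least one decision is required")
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favourable[group] += int(decision)
    return {g: favourable[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group rates."""
    rates = favourable_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical acceptance decisions for two customer groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = favourable_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
```

A gap of zero means all groups receive favourable outcomes at the same rate. A non-zero gap is not proof of unfair treatment on its own, since legitimate risk factors may differ between groups, but it gives the monitoring function a number to track over time.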

The policy should further align with relevant fairness regulations and codes of conduct. For instance, the EU Court of Justice ruling on Gender Rating (delivered in 2011 and effective from December 2012) prohibits the use of gender as a premium rating factor. Compliance with this ruling has been relatively straightforward using traditional pricing models, but black-box AI models may present new challenges. Advanced AI systems may develop proxy rating factors that indirectly reflect gender, even when gender is not explicitly included in the training dataset.
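A basic screen for proxy rating factors is to test how strongly each candidate feature correlates with the protected attribute, which is held out of the model but retained for testing. The sketch below uses a plain Pearson correlation; the feature names, the sample values and the 0.5 threshold are hypothetical. It is deliberately limited: it only detects linear, single-feature proxies and would miss proxies formed by combinations of features.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no information
    return cov / sqrt(var_x * var_y)


def flag_proxies(features, protected, threshold=0.5):
    """Names of features whose absolute correlation meets the threshold."""
    return sorted(name for name, values in features.items()
                  if abs(pearson(values, protected)) >= threshold)


# Hypothetical test data: protected attribute encoded as 0/1.
protected = [0, 0, 0, 1, 1, 1]
features = {
    "height_cm": [160, 165, 162, 178, 182, 180],
    "policy_term_years": [10, 20, 15, 10, 20, 15],
}

flagged = flag_proxies(features, protected)
```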

Additionally, the EIOPA Opinion highlights concerns about differential pricing practices, emphasising the need for insurers to develop strategies to mitigate such risks and ensure fair treatment of consumers.

Data Governance

Most insurers have well-established data governance practices, with several regulatory frameworks already setting data quality and governance standards:

- Article 82 of the Solvency II Directive establishes requirements for the quality of data used in technical provisions.

- Article 260 of the Solvency II Delegated Regulations mandates governance policies for data used in underwriting and reserving.

- Article 6 of the Product Oversight & Governance requirements compels quantitative stress testing of certain insurance products, which must rely on appropriate data sets.

Article 10 of the AI Act sets new data governance obligations for High-Risk AI systems. Depending on an insurer’s risk profile, some of these requirements may also be adopted for other AI systems as a best practice.

At a minimum, the AI Risk Management Policy should establish strong governance standards for data used in training and testing AI models, ensuring appropriate measures are in place to eliminate bias. While data cleansing and transformation can help reduce bias, insurers should implement controls to prevent unintended management bias from influencing the data transformation process.

The policy should clearly state that data governance standards apply to both internal and externally sourced data.

Finally, insurers must ensure that the AI Risk Management Policy aligns with existing Data Protection & Privacy Policies to maintain regulatory compliance and avoid conflicts between governance frameworks.

Documentation & Record Keeping

Maintaining detailed records is essential for accountability and auditability, as outlined in Article 258 of the Solvency II Delegated Regulations and Article 9 of the Product Oversight & Governance requirements.

The high computational power and automation of AI systems may render traditional manual record-keeping protocols insufficient. To address this, Article 12 of the AI Act mandates that High-Risk AI systems must automatically log key information, such as input data used and system performance monitoring.

The extent of automatic logging required on other AI models will depend on the nature, scale, and complexity of each AI system. At a minimum, the AI Risk Management Policy should require an "auditability by design" approach for developing and procuring AI models.
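One way to picture "auditability by design" is a thin wrapper that writes a log record every time a model produces an output. The sketch below is an assumption-laden illustration: `AuditedModel`, `audit_record`, the record fields and the toy decision rule are all hypothetical, and an in-memory list stands in for what would in practice be an append-only store. Whether raw inputs or only a hash of them are retained is a choice that should be aligned with the insurer's data protection policies.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_id, model_version, inputs, output):
    """Build one log entry; the hash is stable regardless of key order."""
    canonical = json.dumps(inputs, sort_keys=True)
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "input_hash": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "inputs": inputs,
        "output": output,
    }


class AuditedModel:
    """Wraps a prediction function so every call is logged automatically."""

    def __init__(self, model_id, model_version, predict_fn, sink):
        self.model_id = model_id
        self.model_version = model_version
        self._predict_fn = predict_fn
        self._sink = sink  # any object with .append(); a list in this sketch

    def predict(self, inputs):
        output = self._predict_fn(inputs)
        record = audit_record(self.model_id, self.model_version, inputs, output)
        self._sink.append(json.dumps(record))
        return output


# Toy decision rule standing in for a real model.
def toy_rule(applicant):
    return "refer" if applicant["age"] > 60 else "accept"


log = []
model = AuditedModel("uw-triage", "1.0.0", toy_rule, log)
first = model.predict({"age": 65, "smoker": False})
second = model.predict({"smoker": False, "age": 65})  # same data, different key order
entries = [json.loads(line) for line in log]
```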

Additionally, insurers should establish clear policies for maintaining:

- AI validation reports;

- Incident response records; and

- Development history logs.

Transparency & Explainability

Effective governance regimes rely on measurable and monitorable metrics. AI-assisted decision-making must be transparent, and the underlying models explainable.

- Article 258 of the Solvency II Delegated Regulations requires insurers to implement information systems that generate complete, reliable, clear, consistent, timely, and relevant business data.

- Article 20 of the Insurance Distribution Directive (IDD) mandates that insurers provide objective and comprehensible information to help customers make informed decisions. If intermediaries are involved, Article 8 of the Product Oversight & Governance requirements obligates insurers to supply distributors with appropriate product details.

- The AI Act further requires that individuals must be explicitly informed when they are interacting with an AI system, regardless of the use case.

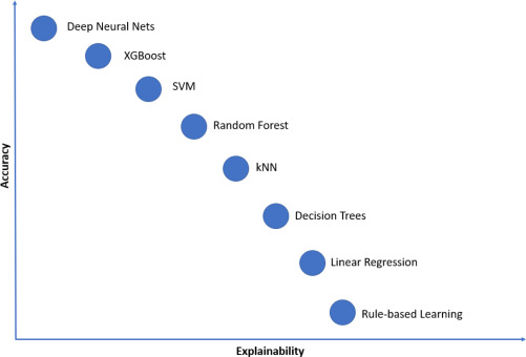

The degree of explainability required will vary depending on:

- The potential impact of the AI system (e.g., customer-facing decisions vs. internal processes);

- The complexity of the model (e.g., simple decision trees vs. black-box neural networks); and

- The stakeholders relying on AI outputs (e.g., customers, regulators, or internal teams).

Not all machine learning models offer the same level of interpretability. Neural networks can be highly accurate but often lack explainability. Decision trees, in contrast, are typically more transparent and easier to interpret.

The AI Risk Management Policy should outline the insurer’s appetite for using less explainable models and define the conditions under which they may be deployed. For example:

- Less explainable models may be unsuitable for AI systems that could negatively impact customers (e.g., underwriting or claims decisions).

- More relaxed rules may apply to back-office AI applications with limited risk exposure.

- AI models used to verify or challenge traditional models may also follow a different governance approach.

Where less explainable models are used, explainability tools should be leveraged to mitigate risks to within acceptable thresholds. The Policy should define clear criteria for this. EIOPA’s Opinion references widely used explainability tools such as LIME and SHAP, but also stresses the importance of documenting their limitations.

The Policy should also establish strict guidelines for human oversight of less explainable models to prevent unintended biases and errors.

Additionally, the Policy can be strengthened by distinguishing between:

- Global explainability (e.g., explaining how an AI model determines overall reserve values).

- Local explainability (e.g., clarifying why a specific AI-driven decision impacted an individual policyholder).
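The distinction is easiest to see on a fully transparent model. The sketch below uses a hypothetical linear model (the coefficients, feature names and portfolio are invented for illustration): the global view averages the size of each feature's contribution across the portfolio, while the local view breaks down one policyholder's result relative to the portfolio average. Tools such as LIME and SHAP aim to produce comparable attributions for less transparent models, with the limitations noted above.

```python
def predict(coefs, intercept, x):
    """Output of a simple linear model."""
    return intercept + sum(coefs[k] * x[k] for k in coefs)


def portfolio_means(coefs, portfolio):
    return {k: sum(p[k] for p in portfolio) / len(portfolio) for k in coefs}


def local_contributions(coefs, means, x):
    """Local view: each feature's contribution for one policyholder,
    measured against the portfolio average."""
    return {k: coefs[k] * (x[k] - means[k]) for k in coefs}


def global_importance(coefs, portfolio):
    """Global view: mean absolute contribution of each feature."""
    means = portfolio_means(coefs, portfolio)
    return {
        k: sum(abs(coefs[k] * (p[k] - means[k])) for p in portfolio) / len(portfolio)
        for k in coefs
    }


# Hypothetical model and portfolio.
coefs = {"age": 2.0, "sum_assured_k": 0.5}
intercept = 100.0
portfolio = [
    {"age": 30, "sum_assured_k": 100},
    {"age": 50, "sum_assured_k": 200},
    {"age": 40, "sum_assured_k": 150},
]

means = portfolio_means(coefs, portfolio)
importance = global_importance(coefs, portfolio)
local = local_contributions(coefs, means, portfolio[1])

# For a linear model the local contributions add up exactly to the
# difference between this policyholder's output and the average case.
difference = predict(coefs, intercept, portfolio[1]) - predict(coefs, intercept, means)
```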

Figure (image 2): An illustrative accuracy vs explainability trade-off of some common machine-learning models.

Human Oversight

Article 4 of the AI Act mandates that firms ensure an adequate level of AI literacy among their staff. The AI Risk Management Policy should establish a training framework tailored to the needs of employees across different functions.

At a minimum, the Board of Directors must have a sufficient understanding of how AI is used within the organisation and the associated risks. More specialised training programs can be developed based on AI use cases and individual roles.

The Policy should also define expectations for human oversight, particularly in:

- Identifying and mitigating biases in AI models; and

- Enhancing model explainability for decision-making.

Article 258 of the Solvency II Delegated Regulations requires insurers to maintain qualified and competent staff for control functions. The AI Risk Management Policy should specify the necessary AI literacy levels to comply with these obligations.

Additionally, Article 7 of the Product Oversight & Governance rules requires insurers to continuously monitor and review their insurance products. This process becomes more complex when AI models are involved, making it crucial for the Policy to define the appropriate level of human oversight for AI-driven products.

Finally, Article 14 of the AI Act states that High-Risk AI systems must be designed with human-machine interface tools to ensure appropriate oversight and intervention capabilities.

Accuracy, Robustness & Cybersecurity

AI systems must be secure, consistent, and reliable throughout their entire lifecycle—not just at deployment.

- Article 258 of the Solvency II Delegated Regulations mandates that insurers safeguard the security, integrity, and confidentiality of information.

- Article 11 of DORA outlines ICT business continuity requirements, which should be aligned with AI governance policies. While firms may already have business continuity plans, the AI Risk Management Policy should clearly define how AI-specific risks integrate into these existing frameworks.

The Policy should outline:

- Performance metrics to monitor AI systems over time;

- Frequency of monitoring and the responsible oversight functions; and

- Fairness metrics for AI models that impact consumer outcomes.
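As an example of a metric that could be monitored over time, the sketch below computes a Population Stability Index (PSI), which compares the distribution of a model input or score at deployment with the distribution seen at development. The function name, the bin counts and the `eps` floor are assumptions. Values around 0.1 and 0.25 are often quoted as rules of thumb for moderate and significant shift, but any threshold is for the Policy to set.

```python
from math import log


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI = sum over bins of (actual share - expected share) * ln(actual / expected)."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total == 0 or actual_total == 0:
        raise ValueError("distributions must not be empty")
    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor the shares at eps so empty bins do not cause log(0).
        expected_share = max(expected / expected_total, eps)
        actual_share = max(actual / actual_total, eps)
        psi += (actual_share - expected_share) * log(actual_share / expected_share)
    return psi


# Hypothetical score bands: counts at development vs. the latest monitoring period.
development = [25, 25, 25, 25]
latest = [10, 20, 30, 40]

psi_unchanged = population_stability_index(development, development)
psi_latest = population_stability_index(development, latest)
```

Each term in the sum is non-negative, so the index is zero only when the two distributions match and grows as they diverge.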

To mitigate risks, the Policy should establish minimum cybersecurity requirements for AI systems, with heightened protections for models handling large volumes of sensitive data.

For High-Risk AI systems, Article 15 of the AI Act must be followed, ensuring compliance with security and reliability standards.

Additionally, the Policy should address protocols for:

- Pre- and post-deployment testing to validate model accuracy and robustness.

- Controlled decommissioning of outdated AI systems.

Consistency and reliability should be the foundation of AI models used in insurance. The Policy should set high standards for rigorous testing to ensure AI models can:

- Withstand scrutiny across varied datasets;

- Deliver reasonable results even in unforeseen scenarios; and

- Prevent overfitting, particularly in more sophisticated models.
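A simple overfitting check compares a model's error on the data it was trained on with its error on data held back from training. The sketch below is a minimal illustration under stated assumptions: the function names, the sample figures and the 1.5 ratio are hypothetical, and the appropriate error measure and tolerance would depend on the model and its use.

```python
def mean_absolute_error(actual, predicted):
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def overfitting_flag(train_error, holdout_error, max_ratio=1.5):
    """Flag when hold-out error is materially worse than training error."""
    if train_error == 0:
        # A perfect fit on training data is suspicious if hold-out error exists.
        return holdout_error > 0
    return holdout_error / train_error > max_ratio


# Hypothetical claim-cost predictions.
train_actual = [100, 120, 90, 110]
train_predicted = [101, 119, 90, 111]
holdout_actual = [105, 95, 130]
holdout_predicted = [110, 90, 120]

train_mae = mean_absolute_error(train_actual, train_predicted)
holdout_mae = mean_absolute_error(holdout_actual, holdout_predicted)
flagged = overfitting_flag(train_mae, holdout_mae)
```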

Conclusion

As AI continues to transform the insurance industry, establishing a robust AI Risk Management Policy is essential to balancing innovation, governance, and regulatory compliance. Finalyse recommends that insurers proactively address AI-related risks while ensuring fairness, transparency, and accountability in their AI-driven decision-making processes.

How Finalyse can help

Our team of experts helps firms navigate the complexities of AI risk by offering:

- AI Risk Management Policy Development – Assisting insurers in drafting policies that align with regulatory frameworks such as the AI Act, Solvency II, DORA, and IDD.

- AI Inventory & Risk Classification – Supporting insurers in maintaining a fit-for-purpose and compliant register.

- Independent Review of Governance Policies – Performing gap analysis of the firm’s established policies against prevailing market best practice.

- AI Model Validation & Governance – Providing independent validation and review of AI models to ensure fairness, transparency, and robustness.

- Data Governance & Bias Mitigation – Implementing strategies to enhance data quality, prevent bias, and maintain regulatory compliance.

- Audit Documentation – Ensuring audit trails are appropriate and support transparency requirements.

- Human Oversight & Explainability Frameworks – Developing tailored approaches to monitor AI-driven decision-making and ensure accountability.

Please reach out to one of our experienced Finalyse consultants at insurance@finalyse.com.